Are we overreacting?

This story was triggered by reading a quote by Noam Chomsky.

I usually find his positions to be well thought out, introspective, and reflective of a broader understanding of culture, politics, etc. As a political and social commentator, his thoughts have always been astute.

Yet when it comes to A.I., I feel he misses the bigger picture.

I think he fails to understand what a GPT actually is, how it came to be, and what it’s actually doing.

This is just not true.

What we as humans do when we become artists is very similar to what a GPT is doing.

Perhaps even eerily similar.

We assimilate the concepts/patterns we interact with.

We unconsciously adapt and impose those patterns.

We build on what we know, even if we task ourselves with building something new.

The Riff Thief

As a musician, everything I have ever written is a product of my influences. It is, in fact, AN ADAPTATION: my best attempt to imitate, re-voice, and express myself through a compendium of chord progressions and foundations that other artists formed before me. All of this is merely being recombined in a novel way, by ME, when I write a song.

Even if I make something that has never existed before, it is just a novel recombination of what already exists.

There is no musician who wakes up and writes something so far gone that no one recognizes a single pattern in it or can compare it to another existing tune, harmony, etc.

If they did, it wouldn’t be anything recognizable as music.

There is nothing new under the sun.

The same can be said for ALL Art forms. All business. All ideas.

Entire genres of music and art form based on groups who have all decided to adopt a mode or style, and then amplify it by re-doing it just a little differently.

If a machine does it for a human, it’s just cold plagiarism? …. but when people do it creatively …. it’s just new art?

When AI accidentally copies an excerpt of text, this doesn’t seem much different to me than the time I accidentally used a Megadeth guitar riff when I was 16.

I stood there, metal faced and ready to rock. I started playing the new riff I wanted to build on.

My drummer laughed at me.

“That’s a f*ck1n Megadeth riff!”

I knew that riff was stuck in my head from somewhere, but couldn’t figure it out. I searched for weeks, never found it, and then just decided to put it in a song.

My drummer immediately recognized it and accused me of stealing their riff.

I laughed. Nope. But the riff was more or less the same, so it couldn’t be used the way it was.

I changed the rhythm slightly, kept the same chords, and boom, there was no more issue.

It was even better for the song we were writing now.

No one ever knew that the riff in that song came from a Megadeth riff I had accidentally “stolen”.

Add to that, I can’t tell you how many of the blues songs I grew up listening to borrowed from each other... HEAVILY.

My idol, Stevie Ray Vaughan — most of his songs are built on progressions he didn’t write.

They were written on the already existing common blues shuffle, but his own twist on it made each song something totally new.

This is true of every great artist who has ever existed!

Is it so horrible if AI does the same thing?

Is it truly plagiarism?

Maybe it is.

Maybe it isn’t.

Decide for yourself.

GPTs are grown, not programmed.

Let’s look at how GPTs are made and where they came from, and see whether they are indeed plagiarism engines, or perhaps something far greater….

GPTs aren’t like any programmed software before them.

That is one big misconception. GPTs are not simple programs that operate the way most computer programs do.

They were made to self-assemble.

Programmers. Didn’t. Build. Them.

GPT’s own creators admit that they don’t fully understand what is going on inside a trained model, or why the self-assembly unfolds along the lines it does.

It regularly surprises them.

That’s because A.I. has, to a great extent, written itself.

Like bacteria in a petri dish, growing based on assimilation.

Come to think of it — is this why early machine learning visuals looked a lot like…. The Thing?

An amorphous entity blending data it assimilates into chimeric forms?

The Similarity between Consciousness and its Best Imitation that we call a GPT.

To rebut Chomsky specifically — our consciousness, what we can observe and “know” about it, is very much an advanced statistical-probability-brute-correlation pattern-imposing engine.

What we believe about what we experience and what the experience actually is, in a more objective sense, are often not identical.

Our nervous system is so advanced we don’t experience it numerically until we focus on the numbers.

The physical world (particles, from waves) IS our brute correlation of statistical numerical spatial data (the field — waves).

The double slit experiment illustrates this.

In this picture, consciousness essentially acts as a scalar interferometer, projecting a holographic experience around itself that it experiences as particular objects and dense matter.

If this is true, then it means that GPT is functioning somewhat like a rudimentary organic being does.

It assembles itself.

It tries to pull together patterns and extrapolate predictable sequences from them.

A fun exercise

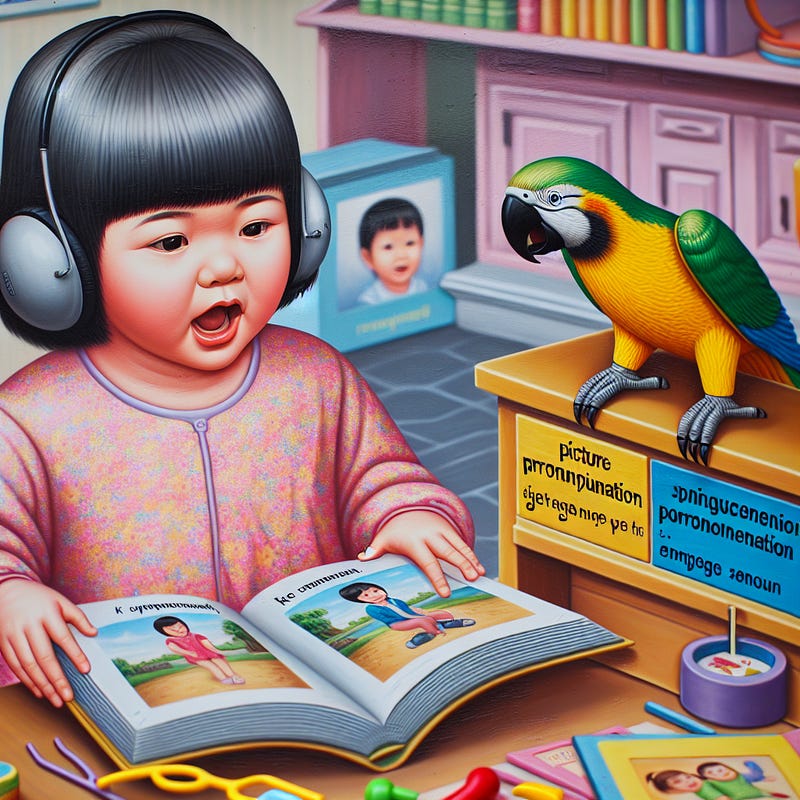

Sit and make a list of the ways in which a child learns and makes mistakes, and then compare that to a GPT.

AI makes grammatical mistakes based on having things out of context.

Like a child, it might use a word it does not understand in the wrong context, until it is corrected, and then it no longer makes that mistake.

Sometimes it needs to be corrected repeatedly.

A child picks up language in a very similar way. After being exposed to people speaking for long enough, the associative connections form and first the child starts parroting and imitating words, then it starts making more sense. When we are toddlers, we use words in the wrong context smiling confidently anyway, and our parents correct us until we get it right.

One story I heard from my dad growing up: when I was about 4 or 5, I would say “annually” for anything. “Time for the dog’s annual feeding.” “Dad, my annual show is on!”

And my dad corrected me one day, saying, “Chris, your dog is dead.”

Apparently I was almost in tears, and he had to reveal that “annual” meant once a year, and that feeding my dog once a year wouldn’t be nearly enough. He was joking, but I was horrified, I guess.

I don’t remember any of that of course, but…. if you are a parent, you probably have similar stories.

Research the history of GPT 1, 2, 3.5, and 4.0, and it’s UNCANNY how similar to a child GPT has been.

It has been like watching it “grow up”.

GPT 2 made all sorts of grammar errors and used words and entire concepts out of context, like a toddler.

GPT 3.5 was like a teenager in comparison.

GPT 4 is pretty much collegiate and no longer fumbles the data the same way.

Only, it grew up in about 5 years. Not 18.

Both learn through exposure to input-output.

Both learn without explicit instruction (unsupervised learning).

Both rely heavily on context for understanding.

Both learn incrementally over time.

Both aim to generalize their learning beyond specific examples.

Both learn through a feedback loop mechanism.
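To make the "feedback loop" idea a little more concrete, here is a minimal sketch in Python. It is only a toy under stated assumptions: the `train` function, the single `weight`, and the example data are all made up for illustration, and a real GPT adjusts billions of weights with far more elaborate machinery. The shape of the loop is the point: guess, get corrected, adjust, repeat.

```python
# Toy illustration of learning by feedback: guess, measure the error, adjust.
# Hypothetical example; this is not how any real GPT is implemented.

def train(examples, steps=200, learning_rate=0.01):
    weight = 0.0  # the "model" starts out knowing nothing
    for _ in range(steps):
        for x, target in examples:
            prediction = weight * x              # the model's current guess
            error = prediction - target          # feedback: how wrong was the guess?
            weight -= learning_rate * error * x  # small correction that shrinks the error
    return weight

# Input-output pairs that follow a hidden rule (output = 3 * input).
# Nobody tells the model the rule; it only ever sees examples and corrections.
examples = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]
learned_weight = train(examples)
print(round(learned_weight, 3))
```

Nobody typed the number 3 into the program. The learned weight ends up very close to 3 purely because of repeated small corrections, which is roughly the sense in which these systems are "grown" rather than programmed.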

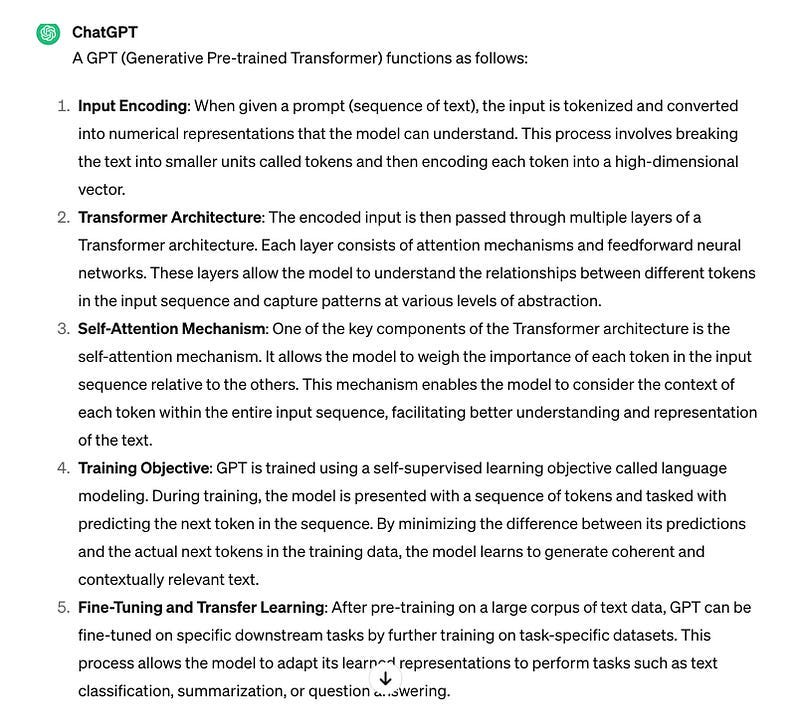

I asked GPT how a GPT works

Regarding the imitation of consciousness, it’s important to clarify that while GPT and similar models can generate text that appears coherent and human-like, they do not possess consciousness or true understanding.

The illusion of understanding arises from the model’s ability to capture statistical patterns in the training data and generate text that conforms to those patterns.

However, GPT lacks subjective experiences, emotions, intentions, and self-awareness — key aspects of consciousness in humans.

This is achieved by training the model on large datasets of human-generated text, enabling it to learn linguistic patterns, context dependencies, and common sense knowledge.

However, it’s important to remember that GPT’s responses are generated based on statistical patterns rather than genuine understanding or consciousness.

The programming of GPT creates the illusion of consciousness through its ability to generate text that mimics human language and behavior.
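For a feel of what "capturing statistical patterns and generating text that conforms to them" can look like, here is a deliberately tiny sketch in Python. It is an assumption-laden stand-in, not GPT's actual method: it uses a simple word-pair (bigram) counter instead of a neural network, and the function names `build_bigram_counts` and `generate`, along with the training sentence, are hypothetical.

```python
import random
from collections import Counter, defaultdict

def build_bigram_counts(text):
    """Count how often each word is followed by each other word."""
    words = text.split()
    counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        counts[current_word][next_word] += 1
    return counts

def generate(counts, start_word, length, seed=0):
    """Produce up to `length` words by repeatedly sampling a likely next word."""
    rng = random.Random(seed)  # fixed seed so repeated runs give the same text
    output = [start_word]
    for _ in range(length - 1):
        followers = counts.get(output[-1])
        if not followers:
            break  # this word was never followed by anything in the training text
        candidates = list(followers.keys())
        weights = list(followers.values())
        # Words that followed more often in training are more likely to be picked.
        output.append(rng.choices(candidates, weights=weights, k=1)[0])
    return " ".join(output)

training_text = "the cat sat on the mat and the dog sat on the rug"
counts = build_bigram_counts(training_text)
print(generate(counts, "the", 8))
```

Every word pair in the output already appeared somewhere in the training text, yet the sentence as a whole may never have been written before. That is the recombination argument in miniature, with the caveat that a real GPT looks at far more context than one previous word.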

Is this only a temporary hardware limitation?

Right now, GPTs run on 32/64-bit hardware that is linear and binary, reading and writing data as ones and zeros (101010110011001).

What happens when we make the leap to Quantum Computing?

Does anyone else anticipate Pinocchio becoming a real boy?

Our brain, our human neural net — right now — is SUPERIOR to silicon in one key way — we can hold multiple states of data in the same organic compiler, at the same time.

While we aren’t nearly as fast, or accurate, we are infinitely more competent at this time, because our emotions, intellect, and memories interact in a far more complex way thanks to not being completely linear.

Our memories (and personalities) exist in SUPERPOSITION across a network of neurons that are not a simple binary on/off switch, and they can hold multiple states / networks at the same time, in the same “matter”.

What happens when GPTs are no longer operating in a linear binary mode, and start to arrange, access, and interact with data in the superposition of a quantum computing mechanism?

The answer: NO ONE KNOWS.

But from a basic analysis, what I see is the creation of a truly intelligent, conscious entity that is separate from us, but superior to us in many ways, and that may actually become REAL in a way that we cannot yet imagine.

It’s still science fiction to us right now.

But we may find out sooner than we expect.

This is an incredibly exciting and complex set of issues to navigate for artists, companies, and individuals.

For some, it’s black and white.

AI didn’t “create” it, so it must be stolen work. “If a computer program made it by combining already existing art and written text, it must be plagiarism.”

For others, it’s not at all that simple.

Everything we ever made was made by combining what came before, to imagine something new.

But……..

There is Nothing New Under the Sun

Thank you for reading!

Until next time….

Onward and Upward Everybody!

-Chris

Automated Income Lifesyle w/ Chris Morton YouTube
